Story

Changmin Lee

My Story

"How does AI learn like humans?"

My academic journey began with this fundamental question. While leading the academic club BAMBOO, I explored the principles of AI with my peers. Through various projects, I realized that many AI methodologies mimic the principles of nature and life, much like how the human visual cortex inspired CNNs.

This insight led me to brain decoding. I came to see that a deep understanding of the brain could be the breakthrough that next-generation AI needs. I now aim to realize the "AI-to-Brain" paradigm: using advanced AI models not just to mimic the brain, but to understand it.

Vision

Research Vision

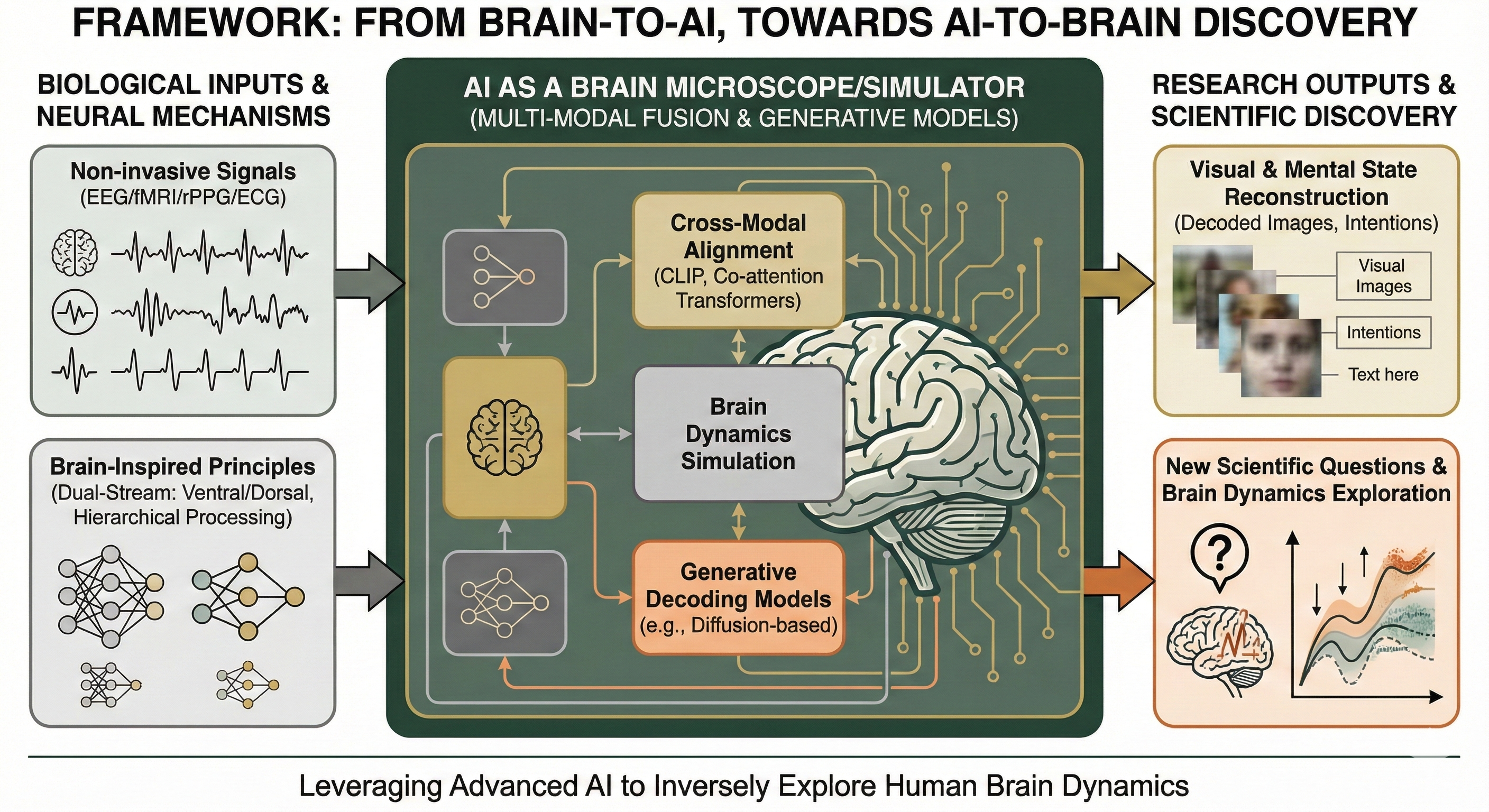

"From Brain-to-AI, Towards AI-to-Brain"

I aim to go beyond simply creating AI that mimics the brain.

My ultimate goal is to use highly advanced AI models as a 'microscope' or 'simulator' for the human brain, working in the reverse direction to formulate new scientific questions and find answers to them.

Figure 1. Proposed Research Framework: Leveraging Advanced AI to Explore Human Brain Dynamics

Interests

Research Interests

My research goal is to build the brain's computational principles into AI models, and then to use those models in turn to explore the brain.

Brain-Inspired AI

Developing AI architectures inspired by neural mechanisms, such as the dual-stream visual pathways (ventral/dorsal) and hierarchical processing.

Brain-Computer Interface

Decoding human intentions and visual experiences from non-invasive signals (EEG, fMRI), and building practical BCI systems that reconstruct them.

Multi-modal Learning

Integrating various modalities—vision, language, and physiological signals—to create comprehensive AI models (e.g., EEG-fMRI fusion).